Autonomous Driving B3RB-buggy

Advanced Autonomous Driving System for NXP-AIM'24

[Code]

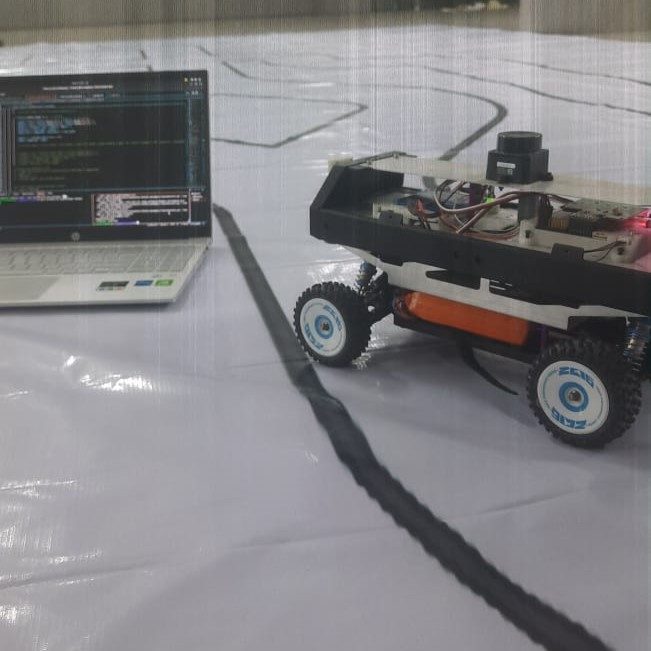

The B3RB Autonomous Driving project explores robotic perception and autonomous navigation, and was developed for the NXP-AIM'24 challenge. Our team built a robotic platform that interprets its environment in real time and makes driving decisions autonomously.

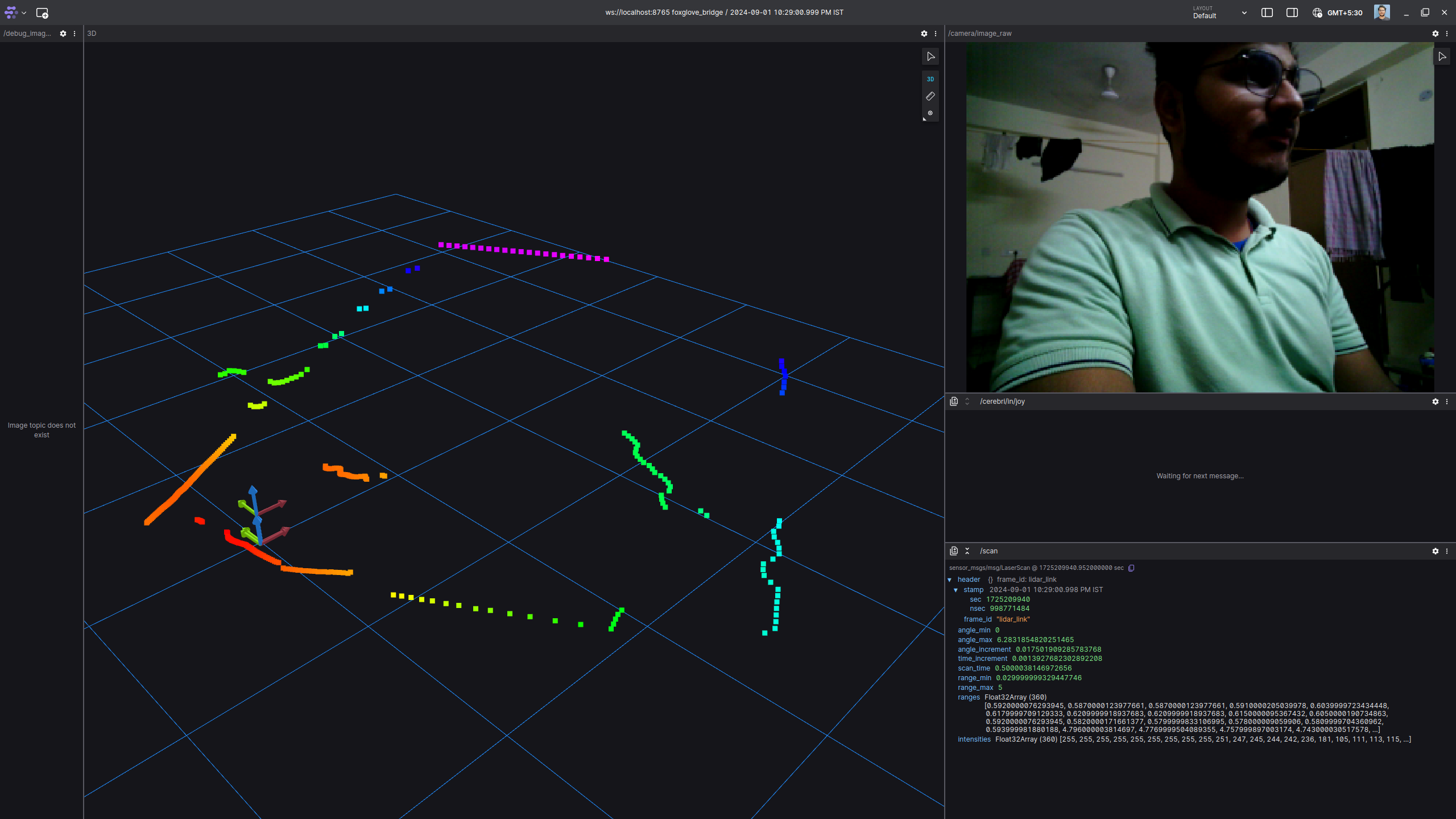

Project highlights: LIDAR-based perception and dynamic object detection.

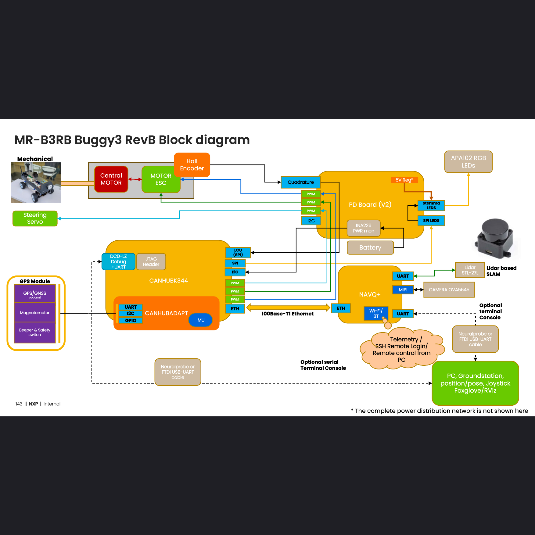

Technical Architecture

The system combines the following compute, perception, control, and machine learning components:

- Computational Platform: Mini computer running ROS2 on Ubuntu 22

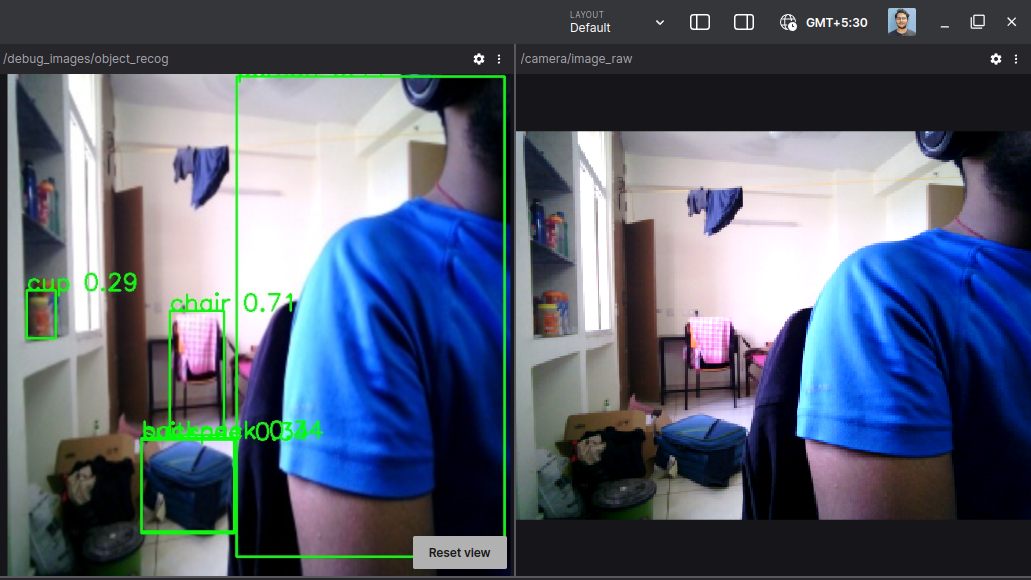

- Perception Systems:
  - LIDAR for environmental mapping
  - Camera for lane detection and traffic sign recognition

- Control Mechanism: Ackermann-steering control for precise navigation

- Machine Learning Model: YOLOv5s with INT8 quantization
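To illustrate the Ackermann-steering item in the list above, here is a minimal sketch of the underlying geometry. It assumes a bicycle-model steering command and converts it into separate left and right front-wheel angles so that both wheels roll around the same turn centre. The function name and the wheelbase and track values are hypothetical and are not taken from the project code.

```python
import math


def ackermann_wheel_angles(steer_rad, wheelbase_m, track_m):
    """Return (left, right) front-wheel angles in radians.

    steer_rad is the bicycle-model steering angle (positive = left turn).
    Assumes the turn radius stays larger than half the track width.
    """
    if abs(steer_rad) < 1e-9:
        return 0.0, 0.0  # driving straight: both wheels point forward
    # Signed turn radius measured at the centre of the rear axle.
    radius = wheelbase_m / math.tan(steer_rad)
    # The wheel closer to the turn centre follows a tighter circle,
    # so it needs a larger steering angle than the outer wheel.
    left = math.atan(wheelbase_m / (radius - track_m / 2.0))
    right = math.atan(wheelbase_m / (radius + track_m / 2.0))
    return left, right


if __name__ == "__main__":
    # Hypothetical dimensions, for illustration only.
    left, right = ackermann_wheel_angles(0.3, wheelbase_m=0.3, track_m=0.2)
    print(f"left={left:.3f} rad, right={right:.3f} rad")
```

On a left turn the left wheel is the inner wheel and receives the larger angle; mirroring the command mirrors the result.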

Key Achievements

- Performance Milestone: Achieved a 1:42 track time

- Real-time Inference: Sustained a 7 Hz processing rate (roughly 143 ms per cycle)

- Advanced Perception: Implemented obstacle detection and lane tracking

- Optimization: Used an NPU-optimized model for efficient inference
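As background for the INT8-quantized, NPU-optimized model mentioned above, the following sketch shows generic per-tensor affine quantization: floats are mapped to 8-bit integers through a scale and a zero point, then mapped back with a small rounding error. This is an illustration of the general idea only. The project's actual quantization toolchain and calibration settings are not described here, and all function names are hypothetical.

```python
def int8_params(x_min, x_max):
    """Return (scale, zero_point) mapping [x_min, x_max] onto int8 [-128, 127]."""
    # The representable range must include 0 so that 0.0 quantizes exactly.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / 255.0
    if scale == 0.0:
        return 1.0, 0  # degenerate all-zero tensor
    zero_point = round(-128 - x_min / scale)
    return scale, max(-128, min(127, zero_point))


def quantize(values, scale, zero_point):
    """Map floats to int8 codes, clamping to the valid range."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(codes, scale, zero_point):
    """Map int8 codes back to approximate floats."""
    return [(q - zero_point) * scale for q in codes]


if __name__ == "__main__":
    weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # made-up values
    scale, zp = int8_params(min(weights), max(weights))
    codes = quantize(weights, scale, zp)
    restored = dequantize(codes, scale, zp)
    for w, q, r in zip(weights, codes, restored):
        print(f"{w:+.3f} -> {q:4d} -> {r:+.4f}")
```

Storing and computing on 8-bit codes instead of 32-bit floats is what lets integer accelerators such as NPUs run the model efficiently, at the cost of a bounded rounding error per value.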

Detailed views of track performance metrics and system architecture.

Note

The grand finale videos are unavailable due to NXP Semiconductors office regulations.